Revision as of 02:51, 30 December 2013

Overview

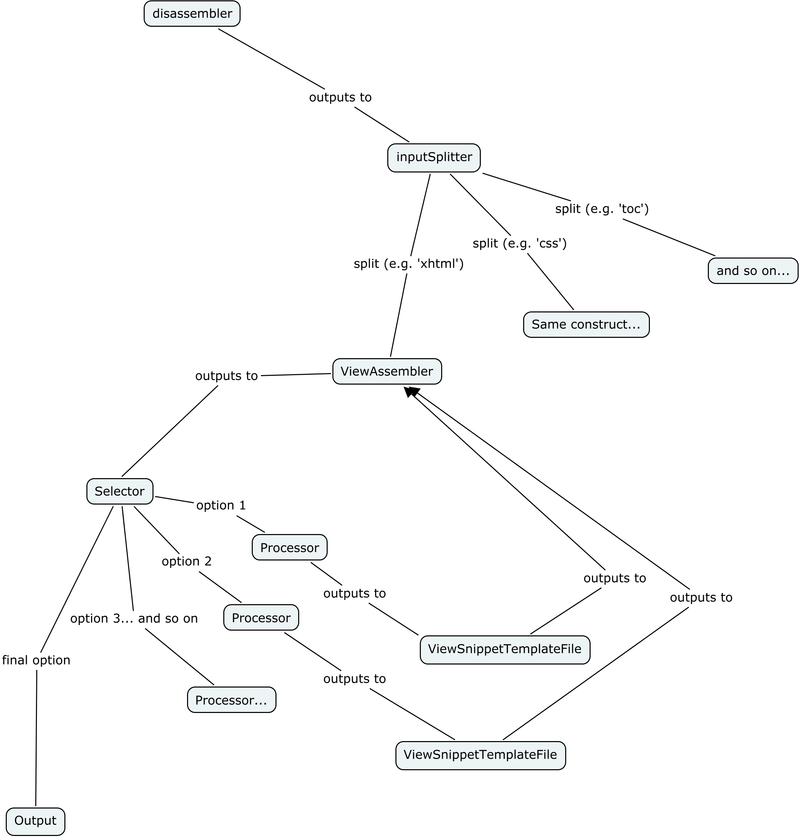

Crawler is designed along principles similar to those of the data flow programming paradigm.

On its own, Crawler does not perform any useful function. To become usable, it needs to be extended with a personality.

The selected personality determines what function Crawler will perform.

Crawler-based Products

Crawler will become available in 2014 in three different flavors.

- Low end: For most users, a 'standard' Crawler personality with a custom configuration will be all that is required (e.g. InDesign-to-XHTML, InDesign-to-EPUB). We'll have a growing range of ready-made personalities available. All that will be needed are some configuration changes to make the personality 'fit' their workflow. Our aim is to cover the needs of 90% of our users.

These 'standard' Crawler setups will be 'closed source'. If all goes well, the first standard Crawler personalities we'll have available will be InDesign-to-XHTML and InDesign-to-EPUB.

We'll also be able to organize training and provide ongoing support for customizing the standard personalities.

- Middle: For more advanced workflows, we'll have customizable Crawler versions that will come with 'open source' personalities.

We'll also be able to provide training and ongoing support for developing or customizing personalities. We expect these Crawler versions to be deployed mostly in a server setup. The anticipated applications are automated conversions, automated web publishing, and automated back-end database updates.

- High end: For the most advanced setups we can also provide 'fully open source' versions of Crawler. This will allow total integration of Crawler into a corporate workflow.

Personality

One of the high-level components in a Crawler-based system is called a personality.

A personality takes input data in one form and processes it into output data in another form.

A few examples:

- InDesign-to-XHTML/CSS: takes in InDesign documents or books and outputs XHTML/CSS files.

- InDesign-to-EPUB: takes in InDesign documents or books, outputs EPUB.

- InDesign-to-Database input: takes in InDesign documents or books, and updates a database with information extracted from the document(s).

During processing, the input document(s) are pushed through a network of adapters provided by the personality; data flows in and out of the adapters.

If the personality were a hive, then the adapters would be the worker bees.

A personality is somewhat reminiscent of a Rube Goldberg machine.

The initial adapters process the document, and take it apart into ever smaller chunks of data.

The reverse also happens: some adapters collate smaller chunks back into larger chunks.

These 'chunks of data' are referred to as granules.

Example: an adapter might take in a paragraph granule and split it into individual word granules. Another adapter further downstream might take a number of word granules and concatenate them back into a paragraph granule.
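The split-and-rejoin example can be sketched in a few lines of Python. This is only an illustration of the idea, not Crawler's actual API: the `Granule` class, the adapter function names, and the `paragraph-end` marker granule are all assumptions made up for this sketch.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Granule:
    """A chunk of data flowing between adapters (hypothetical structure)."""
    kind: str  # e.g. "paragraph" or "word"
    text: str


def split_paragraph(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Adapter: turn each paragraph granule into a stream of word granules."""
    for granule in granules:
        if granule.kind == "paragraph":
            for word in granule.text.split():
                yield Granule("word", word)
            # Assumed marker so a downstream adapter knows where to regroup.
            yield Granule("paragraph-end", "")
        else:
            yield granule  # pass anything else through untouched


def join_words(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Adapter: collate word granules back into one paragraph granule."""
    collected = []
    for granule in granules:
        if granule.kind == "word":
            collected.append(granule.text)
        elif granule.kind == "paragraph-end":
            yield Granule("paragraph", " ".join(collected))
            collected = []
        else:
            yield granule


paragraph = Granule("paragraph", "The quick brown fox")
words = list(split_paragraph([paragraph]))
rebuilt = list(join_words(words))
```

Each adapter here is a generator, so granules flow from one adapter to the next without the whole document having to be held in memory at once.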

Some adapters perform some kind of processing on the granules they receive; they might change them in some way, discard them, count them, reorder them, create new granules based on previous granules...
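As a rough sketch of such processing adapters, the Python below shows one adapter for each of four behaviors: changing, discarding, reordering, and counting granules. Representing a granule as a `(kind, text)` tuple, and all function names, are assumptions for illustration only.

```python
from typing import Iterable, Iterator, Tuple

# Hypothetical stand-in for a real granule: (kind, text).
Granule = Tuple[str, str]


def lowercase_words(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Change: lowercase the text of every word granule."""
    for kind, text in granules:
        yield (kind, text.lower()) if kind == "word" else (kind, text)


def drop_empty(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Discard: skip granules whose text is empty or whitespace."""
    for kind, text in granules:
        if text.strip():
            yield (kind, text)


def sort_by_text(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Reorder: must see every granule before it can emit the first one."""
    yield from sorted(granules, key=lambda granule: granule[1])


def count_words(granules: Iterable[Granule]) -> Iterator[Granule]:
    """Count: pass granules through, then emit a new 'count' granule."""
    total = 0
    for kind, text in granules:
        if kind == "word":
            total += 1
        yield (kind, text)
    yield ("count", str(total))


source = [("word", "Pear"), ("word", ""), ("word", "apple")]
result = list(count_words(sort_by_text(drop_empty(lowercase_words(source)))))
```

Note the difference between the adapters: most can work on one granule at a time, while a reordering adapter has to buffer its whole input first.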

Other adapters construct new granules based on template snippets. For example, some adapter could take in some raw text, and combine this raw text with a template snippet into some XML formatted granule.
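A template-based adapter of this kind might look roughly like the following Python sketch. The snippet text, the `style` parameter, and the function name are hypothetical; only `string.Template` and `xml.sax.saxutils.escape` are standard-library calls.

```python
from string import Template
from xml.sax.saxutils import escape

# Hypothetical template snippet; a real personality would supply its own.
PARAGRAPH_SNIPPET = Template('<p class="$style">$text</p>')


def apply_template(raw_text: str, style: str = "body") -> str:
    """Combine raw text with the template snippet into an XML-formatted granule."""
    return PARAGRAPH_SNIPPET.substitute(
        # Also escape double quotes, because the value lands in an attribute.
        style=escape(style, {'"': "&quot;"}),
        # Escape &, < and > so the raw text cannot break the markup.
        text=escape(raw_text),
    )


xml_granule = apply_template("Fish & chips")
```

Escaping the raw text before substitution matters: without it, a stray `&` or `<` in the input would produce malformed XML.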

The general idea is that the input data is broken apart into smaller entities, and then these smaller entities are put back together again in a different shape, possibly performing a document conversion in the process.
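The overall idea can be illustrated with a toy personality written in Python. It is a deliberately simplified sketch: the adapters form a straight chain rather than a true network, plain strings stand in for granules, and every name (`run_personality`, `TEXT_TO_MARKUP`, and the adapter functions) is an assumption rather than part of Crawler.

```python
from functools import reduce
from html import escape


def split_into_paragraphs(documents):
    """Break each input document apart into paragraph-sized chunks."""
    for document in documents:
        for block in document.split("\n\n"):
            if block.strip():
                yield block.strip()


def wrap_in_markup(paragraphs):
    """Transform each chunk into its output form."""
    for paragraph in paragraphs:
        yield "<p>" + escape(paragraph) + "</p>"


def collate(chunks):
    """Put the chunks back together in a different shape."""
    yield "<body>\n" + "\n".join(chunks) + "\n</body>"


def run_personality(adapters, source):
    """Feed the source through each adapter in turn."""
    return reduce(lambda stream, adapter: adapter(stream), adapters, source)


# A toy 'personality': an ordered list of adapters.
TEXT_TO_MARKUP = [split_into_paragraphs, wrap_in_markup, collate]

output = list(run_personality(TEXT_TO_MARKUP, ["First paragraph.\n\nSecond & last."]))
```

Swapping the list of adapters for a different one would give the same engine a different function, which mirrors how a personality determines what Crawler does.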